Introduction

GPT-5 can reason, follow complex instructions, call tools, and coordinate sub-tasks. To get production-quality answers consistently, make the task unambiguous, constrain the output, and give the model space to think only when it’s worth the latency. This guide gives you practical patterns you can paste into code or playbooks today.

Core principles

- State the goal first. One sentence that defines “done”.

- Constrain the canvas. Format, length, style, variables, acceptance checks.

- Prefer machine-readable outputs. JSON beats prose when you automate.

- Right-size the “thinking”. Light/medium/deep depending on stakes.

- Show one gold example. Few-shot is the fastest path to consistent tone.

Prompt skeleton (drop-in)

<goal> Summarize the incident ticket for executives.

<style> Plain, neutral. No hype.

<format> JSON object following the schema below.

<constraints> No speculation. Cite sources if present.

<reasoning> medium

<schema> {

"title": "string",

"severity": "P1|P2|P3",

"summary_bullets": ["string"],

"owner": "string"

}

<input> {{ticket_text}}

Everything else in this guide builds on that structure.
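If you assemble the skeleton in code, a small template function keeps it consistent across calls. The following is a minimal sketch, not an official API: `SKELETON`, `TICKET_SCHEMA`, and `build_prompt` are hypothetical names, and the tag layout is simply the one used in this guide.

```python
import json
from string import Template

# Hypothetical template that mirrors the skeleton in this guide.
SKELETON = Template(
    "<goal> $goal\n"
    "<style> Plain, neutral. No hype.\n"
    "<format> JSON object following the schema below.\n"
    "<constraints> No speculation. Cite sources if present.\n"
    "<reasoning> $reasoning\n"
    "<schema> $schema\n"
    "<input> $ticket_text"
)

TICKET_SCHEMA = {
    "title": "string",
    "severity": "P1|P2|P3",
    "summary_bullets": ["string"],
    "owner": "string",
}


def build_prompt(ticket_text: str, reasoning: str = "medium") -> str:
    """Fill the skeleton; reject reasoning levels this guide does not define."""
    if reasoning not in {"light", "medium", "deep"}:
        raise ValueError(f"unknown reasoning level: {reasoning}")
    return SKELETON.substitute(
        goal="Summarize the incident ticket for executives.",
        reasoning=reasoning,
        schema=json.dumps(TICKET_SCHEMA, indent=2),
        ticket_text=ticket_text,
    )


print(build_prompt("Checkout API returned 500s for 12 minutes."))
```

Keeping the schema as a Python dict means the same object can later drive validation of the reply.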

JSON & output contracts

Contracts make your pipeline robust. Validate them like any API response.
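Validating a reply can be as plain as parsing it and checking each field. This is a minimal standard-library sketch for the id/facts/risk_score contract used in this section; `validate_contract` is a hypothetical helper, and treating `risk_score` as an integer from 0 to 100 is an assumption about what the schema means.

```python
import json


def validate_contract(raw: str) -> dict:
    """Parse a model reply and check it against the id/facts/risk_score contract."""
    # json.loads raises json.JSONDecodeError (a ValueError) if the reply
    # contains anything other than a single JSON value, e.g. extra prose.
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != {"id", "facts", "risk_score"}:
        raise ValueError(f"unexpected keys: {sorted(data)}")
    if not isinstance(data["id"], str):
        raise ValueError("id must be a string")
    facts = data["facts"]
    if not isinstance(facts, list) or not all(isinstance(f, str) for f in facts):
        raise ValueError("facts must be a list of strings")
    score = data["risk_score"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    # Assumption: risk_score is an integer in the range 0..100.
    if isinstance(score, bool) or not isinstance(score, int) or not 0 <= score <= 100:
        raise ValueError("risk_score must be an integer from 0 to 100")
    return data


reply = '{"id": "INC-7", "facts": ["API returned 500s"], "risk_score": 40}'
print(validate_contract(reply)["risk_score"])
```

On a `ValueError` you can retry the call, log the raw reply, or fall back to a default, exactly as you would for a malformed API response.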

<output-only> Return only JSON that satisfies this schema; no extra prose.

<schema> {

"id": "string",

"facts": ["string"],

"risk_score": "integer 0-100"

}

The same idea applies to prose outputs: fix the section order and the length limits.

<format> Markdown section order: Summary → Details → Next steps.

<constraints> Max 120 words in Summary. At most 5 bullets in Next steps.

Few-shot & style transfer

Show one perfect example; the model will mirror tone, structure, and level of detail.

<example>

input: "Add SSO to our app"

output: {

"title": "Ship SSO with OIDC",

"tasks": ["Choose IdP", "Register app", "Callback route"],

"owner": "Platform"

}

Prefer one or two curated examples over many noisy ones.
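To keep the gold example next to the instructions instead of scattered through string literals, you might build the prompt from data. This is a sketch under assumptions: `few_shot_prompt` and `GOLD` are hypothetical names, and the `<example>` layout is the one shown above.

```python
import json


def few_shot_prompt(instructions: str, examples: list[dict], new_input: str) -> str:
    """Join instructions, curated examples, and the new input into one prompt."""
    parts = [instructions]
    for ex in examples:
        parts.append(
            "<example>\n"
            f"input: {json.dumps(ex['input'])}\n"
            f"output: {json.dumps(ex['output'], indent=2)}"
        )
    parts.append(f"<input> {new_input}")
    return "\n\n".join(parts)


# The single gold example from this section, stored as data.
GOLD = {
    "input": "Add SSO to our app",
    "output": {
        "title": "Ship SSO with OIDC",
        "tasks": ["Choose IdP", "Register app", "Callback route"],
        "owner": "Platform",
    },
}

print(few_shot_prompt("<goal> Turn the request into a task plan.", [GOLD], "Add audit logs"))
```

Storing examples as data also makes it easy to swap one in or out and rerun your evals.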

Reasoning effort dials

Use deeper thinking only when the task truly benefits.

<reasoning> light # transforms/extraction

<reasoning> medium # most product use-cases

<reasoning> deep # planning, code generation, multi-step workflows

<checks> List 3 risks; verify constraints before final answer.

Task decomposition (planner → worker → critic)

Break complex tasks into small, verifiable steps. Each step has its own micro-contract.
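The planner, worker, and critic roles in this section can be wired together with a short loop. The sketch below is one possible arrangement, not a prescribed one: the three callables stand in for model calls, `Verdict` and `run_pipeline` are hypothetical names, and the retry limit is an arbitrary choice.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Verdict:
    passed: bool
    reasons: list[str] = field(default_factory=list)


def run_pipeline(
    request: str,
    planner: Callable[[str], list[str]],
    worker: Callable[[str, list[str]], str],
    critic: Callable[[str, str], Verdict],
    max_retries: int = 2,
) -> list[str]:
    """Plan once, then run each step until the critic passes it."""
    results = []
    for step in planner(request):
        feedback: list[str] = []
        for _attempt in range(max_retries + 1):
            output = worker(step, feedback)
            verdict = critic(step, output)
            if verdict.passed:
                results.append(output)
                break
            feedback = verdict.reasons  # delta fixes for the next attempt
        else:
            raise RuntimeError(f"step never passed its checks: {step}")
    return results


# Toy stand-ins so the sketch runs without a model.
def toy_planner(request: str) -> list[str]:
    return [part.strip() for part in request.split(",")]


def toy_worker(step: str, feedback: list[str]) -> str:
    return step.upper() if feedback else step


def toy_critic(step: str, output: str) -> Verdict:
    ok = output.isupper()
    return Verdict(ok, [] if ok else ["use upper case"])


print(run_pipeline("draft, review", toy_planner, toy_worker, toy_critic))
```

The critic's reasons are passed back to the worker, so each retry is a targeted fix and not a fresh attempt.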

Planner: expand request → steps → tools → acceptance checks.

Worker: execute each step; honor output schema.

Critic: run checks; return PASS/FAIL with reasons and delta fixes.

Tools & retrieval

Ground the model in live or private data and automate actions.
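When you orchestrate the tool policy in code instead of leaving it to the model, the control flow is short. This sketch assumes that `searchDocs` returns a list of dicts with `title` and `url` keys and that `createTicket` returns a ticket id; neither return shape is specified in this guide, and `handle_request` is a hypothetical name.

```python
from typing import Callable


def handle_request(
    intent: str,
    query: str,
    search_docs: Callable[..., list[dict]],
    create_ticket: Callable[..., str],
) -> dict:
    """Apply the search-then-ticket policy and return the final answer parts."""
    # 1) Search first and summarize whatever came back.
    hits = search_docs(q=query, k=3)
    links = [hit["url"] for hit in hits]  # assumed result shape
    next_steps = []
    if hits:
        summary = "; ".join(hit["title"] for hit in hits)
        next_steps.append("Read the linked articles.")
    else:
        summary = "No matching KB article found."
        # 2) Only a bug report without a KB article gets a ticket.
        if intent == "bug":
            ticket_id = create_ticket(
                title=f"Bug: {query}", body=f"No KB article for: {query}"
            )
            next_steps.append(f"Track ticket {ticket_id}.")
        else:
            next_steps.append("Rephrase the question.")
    # 3) Final answer order: summary, links, next steps.
    return {"summary": summary, "links": links, "next_steps": next_steps}


# Fake tools so the sketch runs offline.
def fake_search(q: str, k: int = 3) -> list[dict]:
    kb = [{"title": "Reset your password", "url": "https://example.com/kb/reset"}]
    return [doc for doc in kb if q.lower() in doc["title"].lower()][:k]


def fake_create_ticket(title: str, body: str) -> str:
    return "T-1"


print(handle_request("bug", "export crashes", fake_search, fake_create_ticket))
```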

tools:

- name: "searchDocs", args: { q: string, k?: number }

- name: "createTicket", args: { title: string, body: string }

policy:

- 1) Call searchDocs with user intent; summarize top results.

- 2) If missing KB article and intent is "bug", draft and call createTicket.

- 3) Final answer: summary → links → next steps.

Agents as tools

Wrap a capability into a specialized agent and expose it like any other tool.
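One way to expose an agent as a tool is to wrap it in a descriptor that also checks its output contract. The sketch below is illustrative only: `as_tool` and `fake_cleaner` are hypothetical, the descriptor layout copies the tool listing in this guide, and the stand-in agent does no real cleaning.

```python
from typing import Callable

# Output keys of the DataCleaner agent described in this section.
REQUIRED_OUTPUT_KEYS = {"columns", "issues", "clean_csv_url"}


def as_tool(name: str, agent: Callable[[str], dict]) -> dict:
    """Wrap an agent callable in a tool descriptor plus an output check."""

    def call(csv_url: str) -> dict:
        result = agent(csv_url)
        missing = REQUIRED_OUTPUT_KEYS - set(result)
        if missing:
            raise ValueError(f"{name} output is missing: {sorted(missing)}")
        return result

    return {"name": name, "args": {"csv_url": "string"}, "call": call}


def fake_cleaner(csv_url: str) -> dict:
    # Stand-in for a real agent; it only echoes a plausible result shape.
    return {
        "columns": ["id", "email"],
        "issues": ["2 duplicate rows"],
        "clean_csv_url": csv_url.replace(".csv", ".clean.csv"),
    }


tool = as_tool("DataCleaner", fake_cleaner)
print(tool["call"]("https://example.com/users.csv")["clean_csv_url"])
```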

agent: "DataCleaner"

inputs: { "csv_url": string }

output: { "columns": ["string"], "issues": ["string"], "clean_csv_url": "string" }

Evaluation, extraction & guardrails

- Golden sets: freeze 20–100 hard prompts with expected outputs.

- Field checks: schema validation, toxicity filters, cost/latency budgets.

- Self-checks: require the model to assert assumptions & uncertainties.
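A golden set needs very little machinery to be useful. The following sketch assumes exact-match comparison, which suits structured outputs better than free prose; `run_golden_set` is a hypothetical helper and `fake_model` stands in for a real model call.

```python
from typing import Callable


def run_golden_set(cases: list[dict], model: Callable[[str], str]) -> dict:
    """Run every frozen prompt and report the pass rate plus each failure."""
    failures = []
    for case in cases:
        got = model(case["prompt"])
        if got != case["expected"]:
            failures.append(
                {"prompt": case["prompt"], "expected": case["expected"], "got": got}
            )
    passed = len(cases) - len(failures)
    pass_rate = passed / len(cases) if cases else 0.0
    return {"pass_rate": pass_rate, "failures": failures}


# Fake model so the sketch runs offline: it labels any text containing "refund".
def fake_model(prompt: str) -> str:
    return "refund" if "refund" in prompt.lower() else "question"


golden = [
    {"prompt": "I want a refund for my order", "expected": "refund"},
    {"prompt": "The export button crashes", "expected": "bug"},
]
report = run_golden_set(golden, fake_model)
print(report["pass_rate"], len(report["failures"]))
```

Run it on every prompt change and treat a drop in pass rate like a failing unit test.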

<self-check> List unknowns and confidence (0–1). If confidence < 0.6, ask for more input.

Copy-paste patterns

1) Delta edit (non-destructive rewrite)

{ "source": {{text}},

"change": "Convert to a 120-word LinkedIn post with 3 bullets and a CTA.",

"constraints": ["Preserve product names", "No emojis"] }

2) Summarize to JSON

<goal> Summarize support email for CRM.

<format> { "intent": "refund|bug|question", "sentiment": "pos|neu|neg", "bullets":["..."] }

<reasoning> light

<input> {{email_text}}

3) Code planner → implementer

Step 1 (Planner): Produce a plan with functions, signatures, and tests.

Step 2 (Worker): Implement only the next function per the plan; output patch diff.

4) RAG answer with citations

<goal> Answer using only the provided documents.

<format> Markdown with footnote citations.

<constraints> No outside knowledge; say "insufficient context" if needed.

Common pitfalls

- Unbounded prose — use JSON or strict markdown sections.

- “Do everything” prompts — split into planner/worker/critic steps.

- Too deep reasoning on trivial tasks — wastes cost/latency.

- Missing acceptance checks — add self-checks and contracts.

- One-off prompts — templatize and evaluate against a golden set.

FAQ

When should I use deep reasoning? Planning, multi-hop answers, and code design. Otherwise keep it medium or light.

Few-shot vs instructions? Use both: instructions to constrain, one gold example to set tone & structure.

How do I keep costs predictable? Cap context size, cache retrieval, pick the lowest reasoning effort that passes evals.
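Picking the lowest reasoning effort that passes evals can be automated once you have an eval score per level. This is a sketch under assumptions: `cheapest_passing_effort` is a hypothetical helper, the 0.9 threshold is arbitrary, and the scores in the usage lines are made-up placeholders, not measurements.

```python
from typing import Callable, Optional

# Effort levels from this guide, cheapest first.
EFFORTS = ["light", "medium", "deep"]


def cheapest_passing_effort(
    score_at: Callable[[str], float], threshold: float = 0.9
) -> Optional[str]:
    """Return the first (cheapest) effort whose eval score meets the threshold."""
    for effort in EFFORTS:
        if score_at(effort) >= threshold:
            return effort
    return None  # nothing passed: fix the prompt before paying for more depth


# Placeholder scores for illustration only.
placeholder_scores = {"light": 0.70, "medium": 0.93, "deep": 0.97}
print(cheapest_passing_effort(placeholder_scores.__getitem__))
```

In practice `score_at` would run your golden set at the given effort and return its pass rate.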

Conclusion & next steps

Reliable prompting is about discipline: clear goals, tight contracts, the right reasoning depth, and small agents that do one job well. Start with the skeleton, add a gold example, then build a tiny eval set and iterate. Your future self (and your users) will thank you.

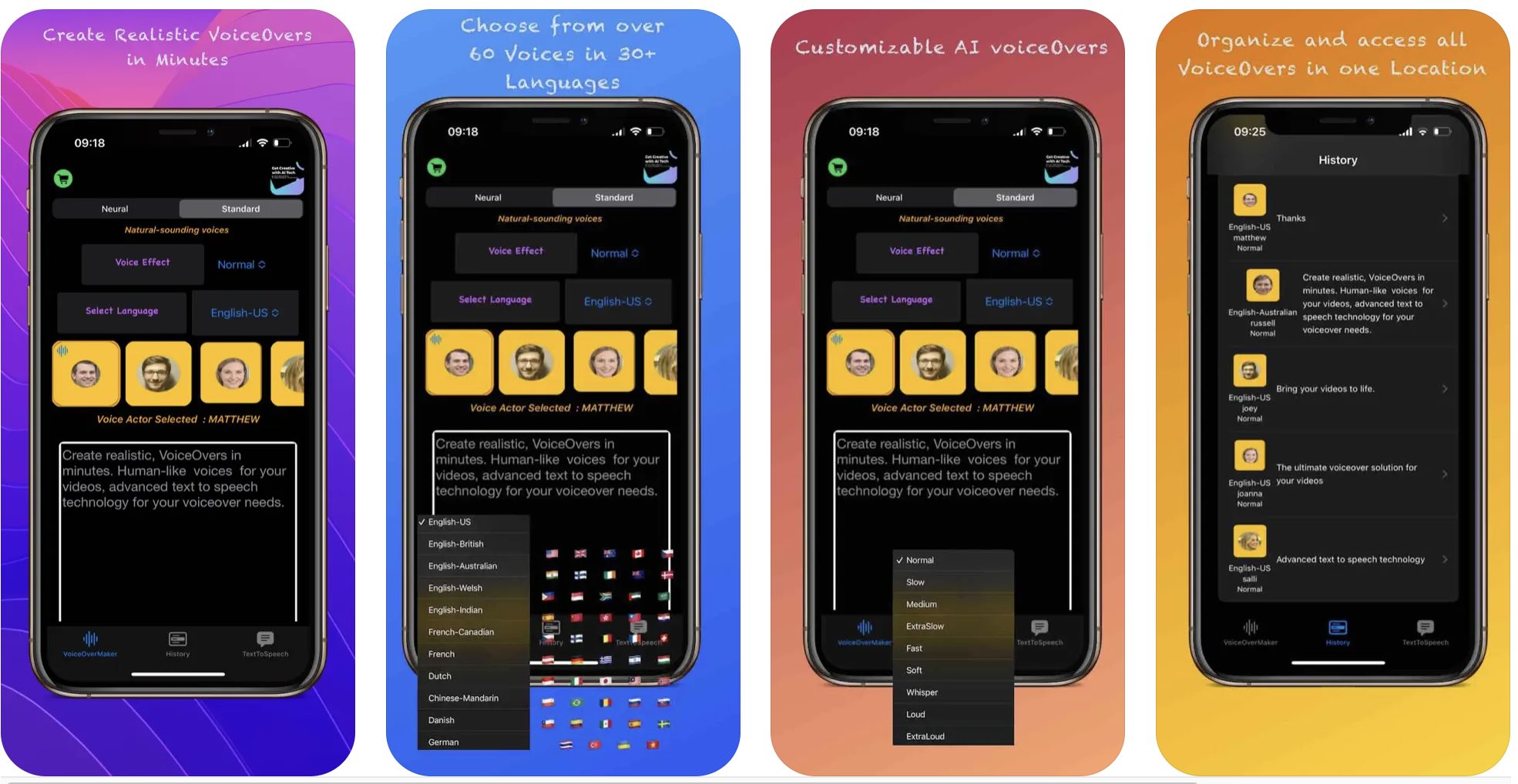
